Boost US Application Speed: Cloud Networking Performance Tweaks

Optimizing network configurations, leveraging content delivery networks, and implementing efficient caching strategies can boost your US application speed by as much as 30%.

Is your US-based application running slower than it should? The cloud networking tweaks below can drastically improve user experience and efficiency. Let’s dive into actionable strategies to supercharge your cloud network.

Understanding Cloud Networking Performance Bottlenecks in the US

Cloud networking in the US faces unique performance challenges due to geographical distances and varying infrastructure quality. Understanding these bottlenecks is crucial for optimizing your application’s speed and responsiveness. Let’s explore these challenges in detail.

Many factors can contribute to slow application speeds. Latency, or the delay in data transfer, is a major concern, especially with users spread across the country. Additionally, insufficient bandwidth and inefficient routing can significantly impact performance.

Latency Issues Across the US

Latency is often the primary culprit behind sluggish application performance. The physical distance data must travel contributes significantly to this delay. Consider that data sent from New York to Los Angeles incurs inherent latency due to sheer distance.
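To put a number on that distance penalty, here is a minimal Python sketch that computes the best-case round-trip time from geography alone. It assumes signals travel through optical fiber at about 200,000 km/s (roughly two-thirds the speed of light in a vacuum) along the great-circle path; the city coordinates are approximate, and real fiber routes are longer and add routing and queuing delay, so treat the result as a floor rather than an estimate.

```python
# Minimal sketch: lower bound on network latency from distance alone.
# Assumptions: ~200,000 km/s signal speed in fiber, great-circle routing.
# Real routes are longer, so measured latency will be higher than this.
import math

SPEED_IN_FIBER_KM_PER_S = 200_000  # approximate

def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two (lat, lon) points, in kilometers."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))

def min_rtt_ms(distance_km):
    """Best-case round-trip propagation delay in milliseconds."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000

# Approximate city coordinates (latitude, longitude).
NEW_YORK = (40.71, -74.01)
LOS_ANGELES = (34.05, -118.24)

d = great_circle_km(*NEW_YORK, *LOS_ANGELES)
print(f"distance ≈ {d:.0f} km, minimum RTT ≈ {min_rtt_ms(d):.0f} ms")
```

For New York to Los Angeles this works out to roughly 3,900 km and about 40 ms per round trip before any processing happens. No amount of server tuning removes that floor; only serving content from locations closer to users lowers it.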

Bandwidth Constraints and Their Impact

Even with fast internet connections, bandwidth bottlenecks can occur if your cloud network isn’t properly configured. Insufficient bandwidth restricts the amount of data that can be transmitted at one time, leading to slower load times and a poorer user experience.

- Optimize Data Transfer Protocols: Use protocols designed for speed and efficiency, such as HTTP/2 or HTTP/3, which multiplex many requests over a single connection.

- Implement Compression Techniques: Reduce data size before transmission.

- Regular Network Monitoring: Identify and address bandwidth bottlenecks promptly.
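A quick way to see what compression buys you is to measure it. This Python sketch gzips a made-up, repetitive JSON payload with the standard-library `gzip` module; the payload is hypothetical, and real ratios depend heavily on your data (already-compressed images or video barely shrink).

```python
# Minimal sketch: measure how much gzip shrinks a text payload before sending it.
# The records below are a hypothetical API response; real savings vary by data.
import gzip
import json

def compress_payload(data: bytes, level: int = 6) -> bytes:
    """Gzip-compress a payload; higher levels (up to 9) trade CPU time for size."""
    return gzip.compress(data, compresslevel=level)

# Many similar records: repetitive text like this compresses well.
records = [{"id": i, "status": "active", "region": "us-east"} for i in range(500)]
raw = json.dumps(records).encode("utf-8")
compressed = compress_payload(raw)

ratio = len(compressed) / len(raw)
print(f"raw={len(raw)} bytes, gzip={len(compressed)} bytes, ratio={ratio:.2f}")

# The receiver (or a browser, via Content-Encoding: gzip) restores the original bytes.
assert gzip.decompress(compressed) == raw
```

In a web stack this step is usually a server or CDN setting rather than application code, but measuring a representative payload like this helps you decide whether it is worth enabling.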

Identifying and addressing these bottlenecks is the first step towards enhancing cloud networking performance. By understanding the specific challenges your application faces, you can implement targeted solutions to improve speed and responsiveness.

Optimizing Network Configurations for Faster Application Delivery

Optimizing network configurations is essential for achieving faster application delivery in the US. Fine-tuning your network settings can dramatically reduce latency and improve overall performance. Here’s a look at key configuration tweaks.

Proper configuration ensures that data packets are routed efficiently and that network resources are allocated optimally. Through careful planning and implementation, you can significantly enhance your application’s speed and reliability.

Prioritizing Quality of Service (QoS)

Implementing QoS policies allows you to prioritize certain types of network traffic over others. This ensures that critical application data receives preferential treatment, reducing latency and improving responsiveness.
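Conceptually, a QoS policy decides which queued packet goes out next. The toy Python model below shows strict-priority scheduling, one common QoS discipline; the traffic class names and packets are hypothetical, and in practice you configure QoS on network devices or cloud network policies rather than in application code.

```python
# Toy model of QoS strict-priority scheduling: lower class number = higher priority.
# Traffic classes and packet names are hypothetical illustrations.
import heapq
from itertools import count

PRIORITY = {"realtime": 0, "critical-app": 1, "bulk": 2}  # hypothetical classes

class PriorityScheduler:
    def __init__(self):
        self._queue = []
        self._seq = count()  # tie-breaker keeps FIFO order within a class

    def enqueue(self, packet: str, traffic_class: str) -> None:
        heapq.heappush(self._queue, (PRIORITY[traffic_class], next(self._seq), packet))

    def dequeue(self) -> str:
        """Send the oldest packet from the highest-priority non-empty class."""
        _, _, packet = heapq.heappop(self._queue)
        return packet

    def __len__(self) -> int:
        return len(self._queue)

sched = PriorityScheduler()
sched.enqueue("backup-chunk-1", "bulk")
sched.enqueue("api-response", "critical-app")
sched.enqueue("voice-frame", "realtime")
sched.enqueue("backup-chunk-2", "bulk")

order = [sched.dequeue() for _ in range(len(sched))]
print(order)
```

Strict priority is simple but can starve lower classes under sustained load, which is why many devices pair it with weighted fair queuing for non-critical traffic.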

Load Balancing Strategies for Optimal Resource Use

Load balancing distributes incoming network traffic across multiple servers, preventing any single server from becoming overwhelmed. This not only improves performance but also enhances reliability and availability.

- Use Round Robin DNS: Rotate server addresses in DNS responses to spread traffic across servers (resolver caching means the split is only approximately even).

- Implement Health Checks: Ensure only healthy servers receive traffic.

- Monitor Server Load: Adjust load balancing configurations as needed.
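Health checks and rotation fit together in a small amount of logic. Here is a minimal Python sketch of a round-robin balancer (at the load-balancer level rather than in DNS) that skips servers marked unhealthy; the IP addresses are placeholders, and the hypothetical `mark_down`/`mark_up` calls stand in for whatever health-check probe you actually run.

```python
# Minimal sketch of round-robin load balancing that skips unhealthy servers.
# Addresses are placeholders; a real balancer probes a health endpoint on a timer
# and calls mark_down/mark_up based on the results.
from itertools import cycle

class RoundRobinBalancer:
    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        """Return the next healthy server in rotation, or raise if none are healthy."""
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")

lb = RoundRobinBalancer(["10.0.1.10", "10.0.1.11", "10.0.1.12"])
lb.mark_down("10.0.1.11")  # e.g. it failed its last health check
picks = [lb.next_server() for _ in range(4)]
print(picks)
```

Plain round robin assumes servers have similar capacity; if they differ, weighted or least-connections strategies are common alternatives.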

By strategically optimizing network configurations, you can drastically reduce latency, improve resource utilization, and deliver a superior application experience to your users across the US.

Leveraging Content Delivery Networks (CDNs) Across the US

Content Delivery Networks (CDNs) are crucial for improving application speed by caching content closer to users. Leveraging CDNs across the US can dramatically reduce latency, enhance performance, and provide a smoother user experience. Let’s explore how CDNs work and their benefits.

CDNs store copies of your application’s content on servers located in various geographical locations. When a user accesses your application, the content is served from the nearest CDN server, minimizing the distance data must travel.

How CDNs Reduce Latency and Improve Speed

By caching content in multiple locations, CDNs significantly reduce latency. Users receive content from a server that is geographically closer, resulting in faster load times and a more responsive application.
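A back-of-the-envelope model makes the benefit concrete. In this Python sketch a cache hit costs one round trip to a nearby edge server and a miss costs that round trip plus a trip from the edge back to the origin; all latency figures and the hit ratio are hypothetical, so plug in your own measurements.

```python
# Back-of-the-envelope model of how a CDN cuts average request latency.
# All numbers are hypothetical placeholders.

def expected_latency_ms(edge_rtt: float, edge_to_origin_rtt: float, hit_ratio: float) -> float:
    """Average latency when hits are served at the edge and misses go to the origin.

    A hit costs one round trip to the nearby edge; a miss costs that round trip
    plus a round trip from the edge back to the origin.
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    miss_cost = edge_rtt + edge_to_origin_rtt
    return hit_ratio * edge_rtt + (1 - hit_ratio) * miss_cost

# Hypothetical: a Los Angeles user and an origin on the East Coast.
direct_to_origin = 70.0  # ms, user -> origin round trip without a CDN
with_cdn = expected_latency_ms(edge_rtt=10.0, edge_to_origin_rtt=65.0, hit_ratio=0.90)
print(f"without CDN: {direct_to_origin:.1f} ms, with CDN: {with_cdn:.1f} ms")
```

With these made-up numbers the CDN path averages about 16.5 ms versus 70 ms direct, and the advantage shrinks as the hit ratio falls, which is why cache hit ratio is worth tracking. The model ignores connection setup; CDNs also shorten TCP and TLS handshakes by terminating connections at the edge.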

Choosing the Right CDN Provider for Your Needs

Selecting the right CDN provider is crucial for optimizing performance. Consider factors such as the CDN’s geographical coverage, performance metrics, pricing, and support services. Research and compare different providers to find the best fit for your application.

- Evaluate CDN Coverage: Ensure comprehensive coverage across the US.

- Check Performance Metrics: Look for low latency and high throughput.

- Consider Pricing and Support: Find a provider that aligns with your budget and support needs.

Effectively leveraging CDNs is a game-changer for application performance, particularly in a geographically diverse region like the US. By caching content closer to users, you can deliver a faster, more responsive, and more satisfying experience.

Implementing Efficient Caching Strategies for Enhanced Performance

Efficient caching strategies are vital for enhancing application performance and reducing server load. By storing frequently accessed data in cache, you can significantly decrease response times and improve the overall user experience. Let’s discuss effective caching techniques.

Caching involves storing data temporarily in a readily accessible location, allowing subsequent requests for that data to be served from the cache rather than the original source. This reduces the need to repeatedly fetch data, leading to faster response times.

Browser Caching for Static Content

Browser caching instructs users’ browsers to store static content locally, such as images, stylesheets, and JavaScript files. This ensures that these resources are loaded from the browser’s cache on subsequent visits, reducing the load on your servers and improving page load times.
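Browser caching is controlled mainly through the `Cache-Control` response header. The Python function below sketches one common policy: long-lived, `immutable` caching for static assets and `no-cache` (store, but revalidate before reuse) for HTML. The extension list and one-year `max-age` are assumptions to adapt to your own build pipeline.

```python
# Minimal sketch: choose a Cache-Control header value by file type.
# Extension list and max-age are assumptions; "immutable" is only safe when
# asset file names change on every release (e.g. app.3f9a1c.js).
from pathlib import PurePosixPath

ONE_YEAR = 365 * 24 * 60 * 60  # seconds

STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".svg", ".woff2"}

def cache_control_for(path: str) -> str:
    """Long-lived caching for static assets; revalidate-before-use for everything else."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        return f"public, max-age={ONE_YEAR}, immutable"
    # HTML and API responses: the browser may store them but must revalidate first.
    return "no-cache"

for p in ["/static/app.3f9a1c.js", "/img/logo.PNG", "/index.html"]:
    print(p, "->", cache_control_for(p))
```

Fingerprinted file names are what make the long `max-age` safe: a new release produces new URLs, so browsers never keep serving a stale asset.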

Server-Side Caching for Dynamic Content

Server-side caching involves storing dynamic content on your servers, reducing the need to regenerate it for each request. This can significantly improve performance for applications that rely heavily on dynamic data.

- Use Object Caching: Store frequently accessed objects in memory.

- Implement Full-Page Caching: Cache entire pages for anonymous users.

- Set Appropriate Cache Expiration Times: Ensure data is refreshed periodically.
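Object caching and expiration times combine naturally in a TTL cache. This minimal Python sketch keeps entries in memory and regenerates them once they expire; in production this role is usually played by a dedicated store such as Redis or Memcached, and `load_user_profile` is a hypothetical stand-in for a slow database query.

```python
# Minimal sketch of a server-side object cache with per-entry expiration (TTL).
# load_user_profile is a hypothetical placeholder for an expensive lookup.
import time

class TTLCache:
    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return a fresh cached value, or call loader(key) and cache the result."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit, still fresh
        value = loader(key)  # miss or expired: regenerate
        self._store[key] = (now + self.ttl, value)
        return value

calls = []

def load_user_profile(user_id):
    calls.append(user_id)  # record each trip to the "slow" source
    return {"id": user_id, "name": f"user-{user_id}"}

cache = TTLCache(ttl_seconds=60)
cache.get_or_load(42, load_user_profile)
cache.get_or_load(42, load_user_profile)  # served from cache
print("source calls:", len(calls))
```

The TTL is the knob from the list above: shorter values keep data fresher, longer values spare the backend more work.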

Implementing efficient caching strategies is fundamental for delivering a fast and responsive application experience. By leveraging browser and server-side caching techniques, you can significantly reduce latency, decrease server load, and boost overall performance.

Monitoring and Analyzing Cloud Networking Metrics Regularly

Regular monitoring and analysis of cloud networking metrics are essential for identifying performance issues and opportunities for optimization. Tracking key metrics allows you to proactively address potential bottlenecks and ensure your application runs smoothly. Here’s why it’s important and what to monitor.

Continuous monitoring provides valuable insights into your network’s performance, allowing you to detect anomalies, identify trends, and make informed decisions to improve speed and reliability.

Key Metrics to Monitor for Optimal Performance

Several key metrics should be monitored to ensure optimal cloud networking performance. These include latency, bandwidth utilization, packet loss, server response times, and error rates. Tracking these metrics provides a comprehensive view of your network’s health.
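Several of these metrics can be derived directly from raw measurements. The Python sketch below uses the standard-library `statistics` module (`quantiles` needs Python 3.8+); the `(latency_ms, http_status)` sample format, the packet counters, and the choice to count HTTP 5xx responses as errors are assumptions for illustration.

```python
# Minimal sketch: summarize raw request samples into latency, error-rate, and
# packet-loss figures. Sample format and packet counters are assumptions;
# real data would come from your load balancer logs or monitoring service.
import statistics

def summarize(samples, packets_sent, packets_received):
    """Compute headline metrics from (latency_ms, http_status) samples."""
    latencies = [ms for ms, _ in samples]
    # Count HTTP 5xx responses as server errors.
    errors = sum(1 for _, status in samples if status >= 500)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=100)[94]
    return {
        "median_latency_ms": statistics.median(latencies),
        "p95_latency_ms": p95,
        "error_rate": errors / len(samples),
        "packet_loss": 1 - packets_received / packets_sent,
    }

# Hypothetical data: 100 requests, two of which failed with HTTP 503.
samples = [(float(ms), 200) for ms in range(1, 99)] + [(250.0, 503), (300.0, 503)]
report = summarize(samples, packets_sent=10_000, packets_received=9_990)
print(report)
```

Percentiles such as p95 are usually more telling than averages, because a handful of very slow requests can hide behind a healthy-looking mean.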

Using Monitoring Tools to Identify Performance Issues

Various monitoring tools are available to help you track and analyze cloud networking metrics. These tools provide real-time insights into your network’s performance, allowing you to quickly identify and address any issues that arise.

- Implement Real-Time Monitoring: Track metrics continuously.

- Set Up Alerts: Receive notifications when performance thresholds are exceeded.

- Analyze Historical Data: Identify trends and patterns.
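Alerts are most useful when they ignore one-off spikes. A common approach, and one many monitoring services let you configure, is to fire only after several consecutive readings breach the threshold. The Python sketch below shows that logic; the 200 ms threshold, the three-reading window, and the readings themselves are hypothetical.

```python
# Minimal sketch of threshold alerting that ignores isolated spikes:
# fire only when the last N readings all exceed the threshold.
# Threshold, window size, and readings are hypothetical.
from collections import deque

class SustainedThresholdAlert:
    def __init__(self, threshold: float, consecutive: int):
        self.threshold = threshold
        self.recent = deque(maxlen=consecutive)

    def observe(self, value: float) -> bool:
        """Record a reading; return True when the last `consecutive` readings all breach."""
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)

alert = SustainedThresholdAlert(threshold=200.0, consecutive=3)  # p95 latency in ms
readings = [120, 450, 130, 210, 230, 260, 140]
fired = [alert.observe(v) for v in readings]
print(fired)
```

The single 450 ms spike does not trigger an alert, while three breaching readings in a row do; widening the window reduces noise at the cost of slower detection.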

By consistently monitoring and analyzing cloud networking metrics, you can proactively identify and resolve performance issues, ensuring your application delivers a fast, reliable, and satisfying experience to users across the US.

Advanced Techniques: Network Segmentation and SD-WAN

For even greater control and optimization, consider advanced techniques like network segmentation and SD-WAN (Software-Defined Wide Area Network). These approaches can provide enhanced security, improved performance, and greater flexibility in managing your cloud network. Let’s delve into these advanced strategies.

These techniques offer a sophisticated way to manage and optimize your cloud network, providing greater control over traffic flow, security, and resource allocation.

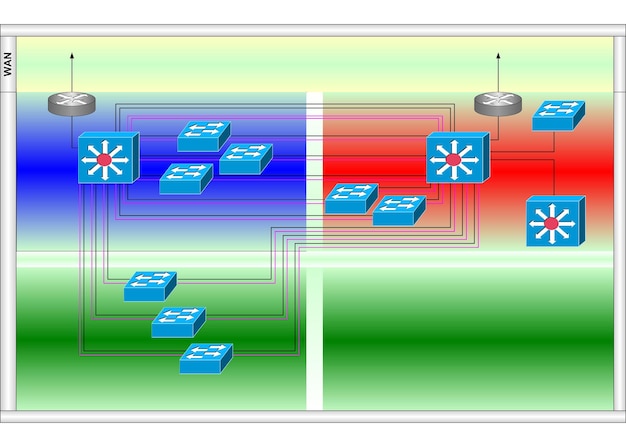

Network Segmentation for Enhanced Security and Performance

Network segmentation involves dividing your network into smaller, isolated segments. This enhances security by limiting the impact of potential breaches and improves performance by reducing network congestion and streamlining traffic flow.
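To make segmentation concrete, this Python sketch uses the standard-library `ipaddress` module to carve an address space into per-tier subnets and check whether a flow between segments is permitted. The `10.0.0.0/16` range, the segment names, and the allow-list are hypothetical; in a real cloud network, isolation is enforced by your provider’s subnets, route tables, and security rules, not by application code.

```python
# Minimal sketch: split an address space into isolated segments and check
# which flows between them are allowed. Range, names, and rules are hypothetical.
import ipaddress
from typing import Optional

vpc = ipaddress.ip_network("10.0.0.0/16")
subnets = vpc.subnets(new_prefix=24)  # iterator of /24 networks

# Assign the first three /24 blocks to three tiers.
SEGMENTS = {name: next(subnets) for name in ("web", "app", "database")}

# Hypothetical allow-list: which segment may initiate traffic to which.
ALLOWED_FLOWS = {("web", "app"), ("app", "database")}

def segment_of(ip: str) -> Optional[str]:
    """Return the name of the segment containing ip, or None if it is outside all of them."""
    addr = ipaddress.ip_address(ip)
    for name, net in SEGMENTS.items():
        if addr in net:
            return name
    return None

def is_allowed(src_ip: str, dst_ip: str) -> bool:
    return (segment_of(src_ip), segment_of(dst_ip)) in ALLOWED_FLOWS

print(SEGMENTS)
print(is_allowed("10.0.0.5", "10.0.1.20"))  # web -> app
print(is_allowed("10.0.0.5", "10.0.2.30"))  # web -> database
```

Because the web tier cannot reach the database directly, a compromise of a web server does not automatically expose the data tier, and traffic stays confined to the paths each tier actually needs.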

SD-WAN for Optimized Wide Area Network Management

SD-WAN technology provides a centralized and automated approach to managing your wide area network. It optimizes traffic routing, improves network visibility, and enhances overall performance by dynamically adapting to changing network conditions.

- Implement Application-Aware Routing: Prioritize traffic based on application requirements.

- Utilize Centralized Management: Simplify network administration.

- Leverage Dynamic Path Selection: Route traffic based on real-time network conditions.
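Application-aware routing and dynamic path selection can be sketched together: filter links by each application’s service-level limits, then pick the best remaining link from the latest measurements. This Python sketch is a simplified illustration, not how any particular SD-WAN product works; the link names, probe results, and per-application limits are all hypothetical.

```python
# Minimal sketch of application-aware dynamic path selection.
# Link names, measurements, and policy limits are hypothetical.
from dataclasses import dataclass

@dataclass
class PathStats:
    name: str
    latency_ms: float
    loss_pct: float
    jitter_ms: float

# Per-application service-level limits (hypothetical values).
APP_POLICY = {
    "voice": {"max_latency_ms": 150, "max_loss_pct": 1.0, "max_jitter_ms": 30},
    "bulk": {"max_latency_ms": 500, "max_loss_pct": 5.0, "max_jitter_ms": 200},
}

def pick_path(app, paths):
    """Among paths meeting the app's limits, choose the lowest-latency one."""
    policy = APP_POLICY[app]
    eligible = [
        p for p in paths
        if p.latency_ms <= policy["max_latency_ms"]
        and p.loss_pct <= policy["max_loss_pct"]
        and p.jitter_ms <= policy["max_jitter_ms"]
    ]
    if not eligible:
        # Nothing meets the policy: fall back to the least lossy path.
        return min(paths, key=lambda p: p.loss_pct).name
    return min(eligible, key=lambda p: p.latency_ms).name

# Latest probe results (hypothetical): the MPLS link is currently congested.
current = [
    PathStats("mpls", latency_ms=40, loss_pct=2.5, jitter_ms=35),
    PathStats("broadband", latency_ms=55, loss_pct=0.3, jitter_ms=12),
    PathStats("lte", latency_ms=90, loss_pct=0.8, jitter_ms=25),
]
print("voice ->", pick_path("voice", current))
print("bulk  ->", pick_path("bulk", current))
```

Voice traffic moves off the lossy MPLS link to broadband, while bulk transfers, which tolerate some loss, keep using the lowest-latency link. Re-running the selection as new probe results arrive is what makes the routing dynamic.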

By implementing advanced techniques like network segmentation and SD-WAN, you can achieve even greater levels of performance, security, and flexibility in managing your cloud network, ensuring your application delivers an exceptional experience to users across the US.

| Key Point | Brief Description |
|---|---|
| 🚀 Network Optimization | Fine-tune configurations for faster data delivery. |
| 🌐 CDN Utilization | Cache content closer to users for reduced latency. |
| 💾 Caching Strategies | Implement caching to decrease response times. |
| 📊 Monitoring Metrics | Regularly analyze metrics to identify issues. |

Frequently Asked Questions

What is cloud networking performance?

Cloud networking performance refers to the efficiency and speed with which data is transmitted and processed in a cloud-based network. Optimizing it involves minimizing latency and maximizing bandwidth.

How does a CDN improve application speed?

A CDN caches content on geographically distributed servers, reducing the distance data travels to users. This minimizes latency and improves loading times for applications and websites.

Which metrics should I monitor for cloud networking performance?

Key metrics include latency, bandwidth utilization, packet loss, and server response times. Monitoring these metrics helps identify and address performance issues in real time.

How does caching improve performance?

Caching reduces the load on servers by storing frequently accessed data, allowing quicker retrieval and reducing response times. This improves the overall user experience.

What is network segmentation, and how does it help?

Network segmentation divides a network into smaller, isolated segments, enhancing security and improving performance by reducing congestion and streamlining traffic. It also limits the impact of security breaches.

Conclusion

Improving cloud networking performance requires a multifaceted approach encompassing network configuration, CDN utilization, caching strategies, and continuous monitoring. By implementing these tweaks, US-based applications can achieve significant speed improvements, delivering a better user experience and driving business success.